Read the full article on Moonsong Labs

Introduction

The inspiration for this post was Vitalik Buterin’s framework for categorizing Machine Learning opportunities in Web3 (originally published in January 2024). In his framework, he outlines four key intersections between AI and crypto: AI as a player, as an interface, as the rules, and as the objective of blockchain systems. In this series, we’ll explore the obstacles and opportunities we encountered while testing these concepts and outline our methodology for building and refining an inference pipeline for Web3. Additionally, we’ll present ideas for further exploration, inviting builders to take these concepts forward.

To kick things off, let us introduce Unravel, a prototype developed to test whether machine learning used to decode transaction data can outperform algorithmic labeling, making Ethereum transactions more human-readable.

If you prefer to explore firsthand, check out the demo at unravel.wtf — we welcome your feedback! But be sure to return to learn more about what we’ve built.

Permissionless Systems as Machine Learning Interpretability Playgrounds

One of the core appeals of blockchains is transparent execution and settlement, intuitively part of what makes them “trustless”.

User intentions on-chain are typically inferred from a series of contract calls which should, in principle, be independently verifiable through transparent traces left by their execution flow. These traces can be examined using blockchain explorers or by directly accessing the chain, making them truly transparent.

However, this ideal of transparency isn’t always realized in practice. When transaction information is readily available, most engineers can follow the flow of typical transactions, yet less savvy users would not be able to. There are scenarios where even experienced engineers can struggle to interpret complex transactions, especially when multiple contract calls are involved—some of which may lack publicly available source code or contain several internal functions. Examples include proxy contracts, account abstraction implementations, or aggregators.

This means that Ethereum users, both experts and novices, often have only a partial understanding (typically based on explorer-provided tags and labels) as to what a transaction is really doing.

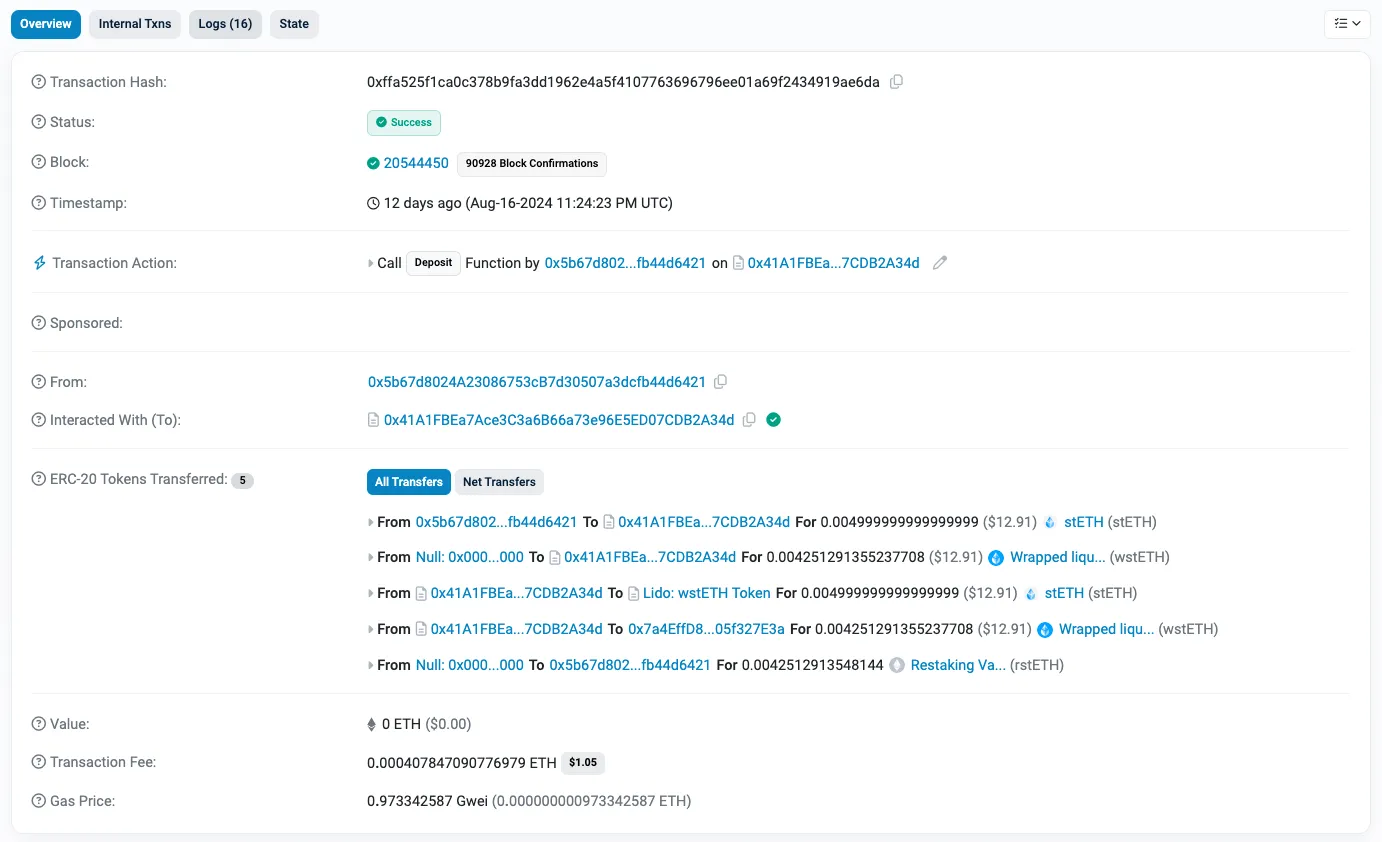

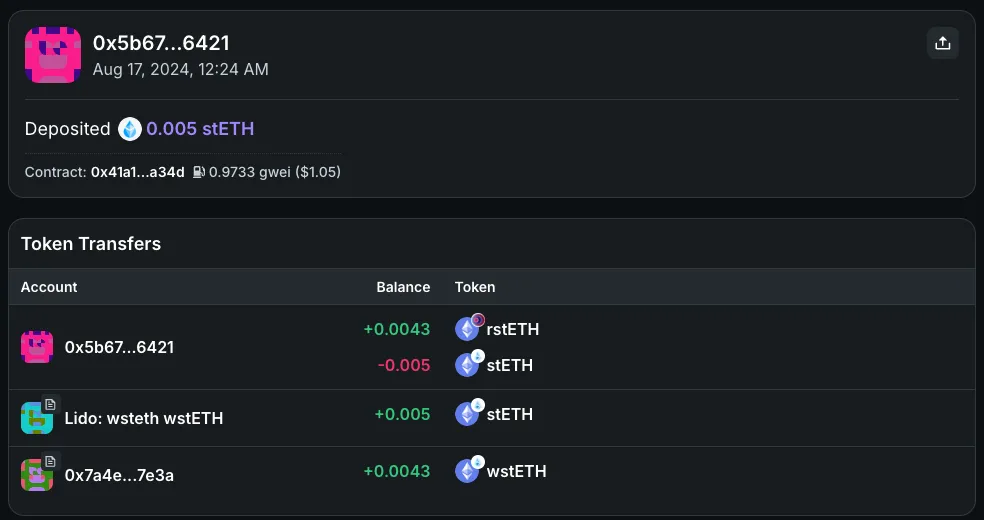

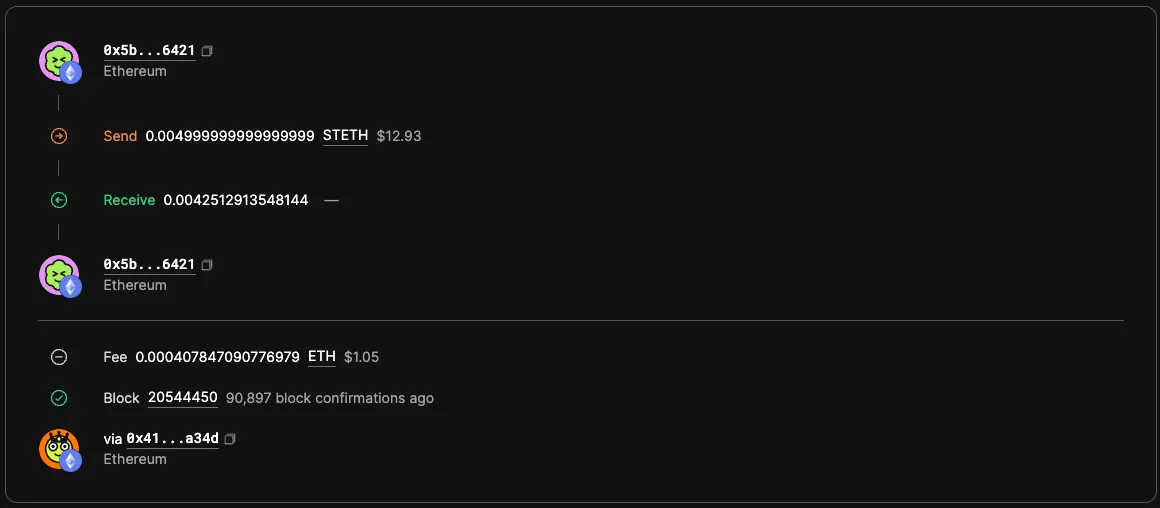

Let’s take this transaction as an example: https://etherscan.io/tx/0xffa525f1ca0c378b9fa3dd1962e4a5f4107763696796ee01a69f2434919ae6da

How would we interpret this?

- First, this transaction generated 76 internal transactions and 16 events in the logs. That is a lot!

- Etherscan labels it as a “Deposit” and points us to inspect one of the contracts we are depositing into in order to understand what it did.

- We can also see Lido stETH and wstETH being transferred, so we can start piecing together what this transaction might mean.

- If we follow the contract address, we’ll find 25 contracts. A quick scan through them identifies a Vault contract we called, and slowly we can come to our “partial understanding” of the transaction.

Yet we are not 100% certain; we don’t have time to read all of this code and review all of those logs!

Note: the same is true for many other portfolio management tools and explorers.

|  |  |
|---|---|
| View on Zapper | View on Ondora |

One approach to improve on this is through algorithmic labeling. For instance, we could develop a parser for well-established patterns like “account abstraction”, or a parser for Lido-related transactions or even label the Vault contract we have encountered. With published specifications for such patterns, we could build, test, and deploy a system to dynamically label transactions. This method is particularly effective when developers adhere to strict standards (such as Ethereum Improvement Proposals or EIPs).
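
To make the idea concrete, here is a minimal sketch of an algorithmic labeler keyed on 4-byte function selectors. The three selectors are the standard ERC-20 ones; the `KNOWN_SELECTORS` table and the `label_call` helper are our own illustrative names, not part of any explorer’s implementation.

```python
# Illustrative lookup table: 4-byte function selector -> human-readable label.
# The selectors below are the standard ERC-20 ones.
KNOWN_SELECTORS = {
    "0xa9059cbb": "ERC-20 transfer",      # transfer(address,uint256)
    "0x095ea7b3": "ERC-20 approve",       # approve(address,uint256)
    "0x23b872dd": "ERC-20 transferFrom",  # transferFrom(address,address,uint256)
}


def label_call(calldata: str) -> str:
    """Label a call by its selector, or return "Unknown" when no pattern matches."""
    data = calldata.lower()
    if not data.startswith("0x"):
        data = "0x" + data
    if len(data) < 10:
        # Fewer than 4 bytes of calldata: there is no selector to look up.
        return "No function call"
    return KNOWN_SELECTORS.get(data[:10], "Unknown")
```

Anything outside the table falls through to `"Unknown"`, which is exactly the limitation discussed next.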

However, in a permissionless system, if the goal is to build a generic solution applicable across various transaction patterns (past, present and future), we don’t always have the luxury of predetermined specifications or advance notice of new patterns. Some standards are adopted by consensus, others go through formal processes like the EIP, and some gain traction organically when they achieve critical mass (e.g., LayerZero OFTs).

In our example, the user has interacted with a product that composes with Lido – Mellow Finance – so our approach wouldn’t quite work there unless we have some prior knowledge about it.

An alternative approach might be to crowdsource the labeling, similar to Zapper Protocol. This approach is interesting because it relies on those who might have the context or expertise to understand the transaction (e.g. if you have approved it). For some labels this might be a good way to derive what the intent “should have been”, but it still doesn’t unravel the complexity of what actually happened.

In short, from our research, for systems where inputs cannot be controlled or predicted, purely algorithmic approaches for labeling aren’t going to work across the board.

What’s needed is a method to dynamically improve the understanding of transactions as more are observed, capable of detecting and internalizing emerging patterns, and a mechanism to validate these learnings to improve interpretation accuracy over time.

A generic transaction decoder for a permissionless system presents an ideal use case for machine learning. Such an approach could adapt to the evolving landscape of blockchain transactions, learning from new patterns as they emerge and providing more accurate and comprehensive interpretations as it processes more data.

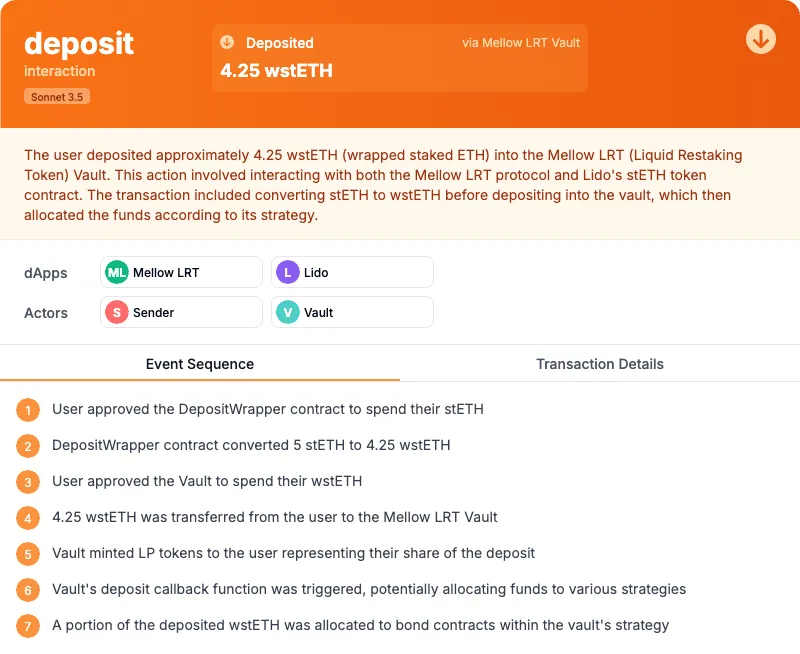

This is what an LLM would understand from our same example transaction. Want to know how? Continue reading!

Unravel: A Prototype to test Machine Learning Transparency

Unravel was born from a simple question: Can existing State of the Art Large Language Models match or surpass algorithmic labelers (like Etherscan) in helping users understand blockchain transactions?

To test this concept, we developed a simple inference pipeline to derive a transaction’s intent from public on-chain and off-chain information. Let’s break it down.

Step 0: Retrieving the “raw” Data and assembling it

The process starts with a data pipeline designed to collect, clean, and store the relevant on-chain data for each transaction. This includes:

- Transaction metadata: Key details of the transaction itself.

- Call traces: Information tracking the execution of smart contracts.

- ABIs (Application Binary Interfaces): Interface data for interacting with smart contracts.

- Contract source code: The underlying code of the smart contracts involved.

In addition to on-chain data, we also compiled a dataset of publicly available off-chain labels via web crawling to improve our intent interpretation and reduce hallucinations during inference. These labels consist of metadata, such as full names, descriptions, symbols, types, decimals, etc., which are helpful for identifying and contextualizing on-chain entities like contracts and tokens.

We assemble all of this data into a call tree and start to infer/decode what each of those items means in the context of the transaction.
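
As a rough sketch of what assembling a call tree could look like, the snippet below nests a flat list of traces into a tree and attaches off-chain labels. It assumes each trace carries a `trace_address` path (a list of child indexes, in the style of Parity-type traces); the field names, the `CallNode` class, and `build_call_tree` are hypothetical and not Unravel’s actual data model.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class CallNode:
    to: str                                # callee address
    selector: str                          # first 4 bytes of calldata, hex encoded
    label: Optional[str] = None            # off-chain label, if we have one
    interpretation: Optional[str] = None   # filled in later by the LLM steps
    children: List["CallNode"] = field(default_factory=list)


def build_call_tree(traces: List[dict], labels: Dict[str, str]) -> CallNode:
    """Nest flat traces into a tree using each trace's `trace_address` path."""
    # Lexicographic order on the path puts every parent before its children
    # and keeps siblings in execution order.
    ordered = sorted(traces, key=lambda t: t["trace_address"])
    root: Optional[CallNode] = None
    for trace in ordered:
        node = CallNode(
            to=trace["to"],
            selector=trace["input"][:10],
            label=labels.get(trace["to"].lower()),
        )
        path = trace["trace_address"]
        if not path:
            root = node
            continue
        if root is None:
            raise ValueError("traces do not contain a top-level call")
        parent = root
        for index in path[:-1]:
            parent = parent.children[index]
        parent.children.append(node)
    if root is None:
        raise ValueError("no traces given")
    return root
```

The `interpretation` field is left empty here on purpose: it is the slot the later LLM steps would fill in.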

Step 1: Unraveling the call tree using LLMs

Whenever we encounter a contract or function for the first time, we initiate an unraveling process, which consists of:

- Profiling each contract

This involves cleaning the source code and passing it to a language model (LLM) to generate a detailed profile. The profile includes the contract’s purpose, key components, major interactions, state changes, and events. This condensed “input” serves as a reference for subsequent stages in the pipeline.

- Evaluating functions in the context of their contract

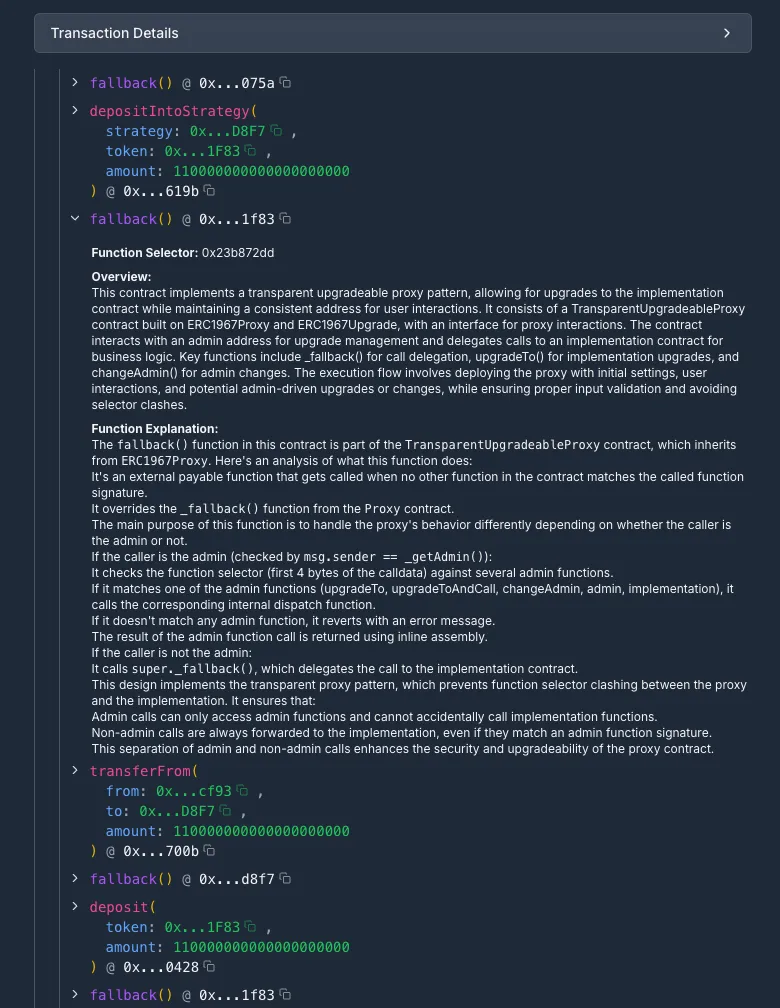

With this contextual understanding, we evaluate each function individually with an LLM. We begin by identifying the function tree (i.e., the hierarchy of function calls) and then analyze the role of each argument to determine the function’s behavior. This information is added to our call tree as we progress, and it also feeds the broader Unravel knowledge base.

- Appending all information to the call tree

Finally, we update the call tree with our explicit intermediate interpretations, forming the call tree you see in Figure 2. (Note: at this stage it is important to remain within the context window of the LLM you are using; in our tests we found better performance when the context is kept as small as possible.) This intermediate result is the context we use to ask the LLM the final question: what was the intent behind this transaction?
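
The “first time we encounter it” behaviour can be sketched as a small cache around the LLM calls. This is an assumption-laden illustration: `llm` stands for any function that takes a prompt and returns text, and the `KnowledgeBase` class, its method names, and the prompt wording are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple


class KnowledgeBase:
    """Caches contract profiles and function explanations across transactions."""

    def __init__(self, llm: Callable[[str], str]) -> None:
        self.llm = llm  # hypothetical: prompt in, completion out
        self.profiles: Dict[str, str] = {}
        self.functions: Dict[Tuple[str, str], str] = {}

    def profile_contract(self, address: str, source: str) -> str:
        key = address.lower()
        if key not in self.profiles:
            # Only the condensed profile is reused downstream, which keeps
            # later prompts small.
            self.profiles[key] = self.llm(
                "Profile this contract: purpose, key components, major "
                "interactions, state changes, and events.\n\n" + source
            )
        return self.profiles[key]

    def explain_function(
        self, address: str, source: str, signature: str, arg_names: List[str]
    ) -> str:
        key = (address.lower(), signature)
        if key not in self.functions:
            profile = self.profile_contract(address, source)
            self.functions[key] = self.llm(
                f"Contract profile:\n{profile}\n\n"
                f"Explain what `{signature}` does and the role of each "
                f"argument: {', '.join(arg_names)}."
            )
        return self.functions[key]
```

Passing the profile rather than the full source into the function-level prompt is one way to keep the context small, in line with the note above.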

Step 2: Intent Extraction and presentation

This step serves two purposes: first, we need the interpretation to summarize accurately the information from a much larger body of text (our call tree), and second, we need the output to be “human-readable”, which is defined not just by the information we extract but also how it is presented to the user.

We have experimented with component-driven adaptive UI approaches (as opposed to fully generative UI) to meet these criteria. Adaptive UI simply means pre-defining a schema that outlines the expected “fields” of our interface, then using an LLM call to generate the structured outputs to feed the fields required.

In this simple example, we have implemented fuzzy finding for the right icon to match the intent generated by the LLM, we made space for an arbitrary number of actions or elements, and in some cases narrowed down the generation of a field to an LLM classifier (say category of an intent which has to be one of 4: transfer, interaction, exchange, and unknown).
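
Two of these pieces are easy to sketch with the Python standard library: fuzzy icon lookup via `difflib.get_close_matches`, and clamping a classifier’s answer to the four allowed categories. The icon list, the `generic` fallback, and the function names are illustrative assumptions, not the prototype’s actual assets or code.

```python
import difflib

# The four categories named above; anything else collapses to "unknown".
CATEGORIES = ("transfer", "interaction", "exchange", "unknown")

# Hypothetical icon names shipped with the UI.
ICONS = ["deposit", "withdraw", "swap", "bridge", "stake", "transfer", "approve"]


def normalize_category(raw: str) -> str:
    """Clamp a free-text LLM answer to one of the allowed categories."""
    value = raw.strip().lower()
    return value if value in CATEGORIES else "unknown"


def pick_icon(intent_word: str, default: str = "generic") -> str:
    """Return the icon whose name is closest to the intent word, if any is close."""
    matches = difflib.get_close_matches(intent_word.lower(), ICONS, n=1, cutoff=0.6)
    return matches[0] if matches else default
```

The `cutoff` value is a tuning knob: lower values match more loosely at the cost of occasionally picking a misleading icon.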

With today’s LLMs, we found adaptive UI to perform better than fully generative UI, but recent progress might make a fully dynamic approach feasible for future versions. In such a scenario, a simple feedback loop could land you on the best data representation based on user feedback alone for an arbitrary number of data representation scenarios, unknown at the time of development.

Our schema design was inspired by Zapper’s work on crowdsourcing event interpretation. Through our testing, we found that a few key components were essential for defining the core aspects of an intent:

- Single-word identifier: This serves as a quick cue, especially useful in navigating large lists.

- Category of interaction: A visual identifier that distinguishes between different types of interactions, such as unilateral actions, value exchanges between parties, or transfers of value to or from a party.

- Core events: Defined by an action verb, an amount, an item, and the protocol/application facilitating the event. These components form the backbone of our intent definition.
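
The components above map naturally onto a small schema that structured LLM output can be validated against. The following is a sketch under our own assumptions: the JSON field names, the `Intent` and `CoreEvent` classes, and `parse_intent` are hypothetical, and the category values are the four mentioned earlier.

```python
import json
from dataclasses import dataclass
from typing import List

CATEGORIES = {"transfer", "interaction", "exchange", "unknown"}


@dataclass
class CoreEvent:
    verb: str      # action verb, e.g. "deposited"
    amount: str    # kept as a string to avoid float rounding
    item: str      # token or asset involved
    protocol: str  # protocol/application facilitating the event


@dataclass
class Intent:
    identifier: str          # single-word cue
    category: str            # one of CATEGORIES
    events: List[CoreEvent]  # arbitrary number of core events


def parse_intent(llm_output: str) -> Intent:
    """Validate the LLM's JSON output against the schema above."""
    data = json.loads(llm_output)
    identifier = str(data["identifier"]).strip()
    if len(identifier.split()) != 1:
        raise ValueError("identifier must be a single word")
    category = str(data.get("category", "unknown")).lower()
    if category not in CATEGORIES:
        category = "unknown"
    events = [
        CoreEvent(
            verb=str(e["verb"]),
            amount=str(e["amount"]),
            item=str(e["item"]),
            protocol=str(e["protocol"]),
        )
        for e in data.get("events", [])
    ]
    return Intent(identifier=identifier, category=category, events=events)
```

Rejecting or normalizing malformed output at this boundary means the UI components only ever see fields they know how to render.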

We further tested the extraction of actors and protocols to enhance the inspection and searchability of intents. To provide a clearer understanding of “how” the intent was achieved—beneficial for both users and the LLM reasoning through “chain-of-thought”—we summarized the call tree into a sequence of human-readable events. This summary captures the essence of the sequence rather than detailing each individual call, offering a concise narrative of the transaction’s flow.

Finally, let’s compare the before and after results side by side (both the good and the bad) to see the improvements.

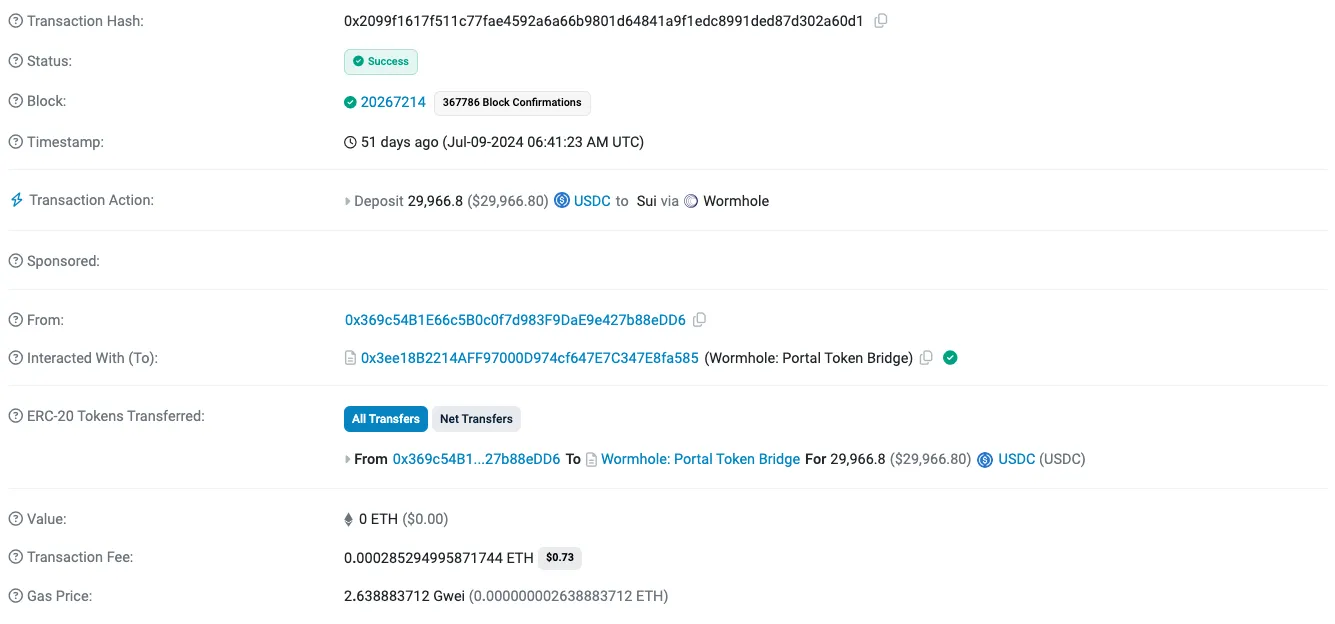

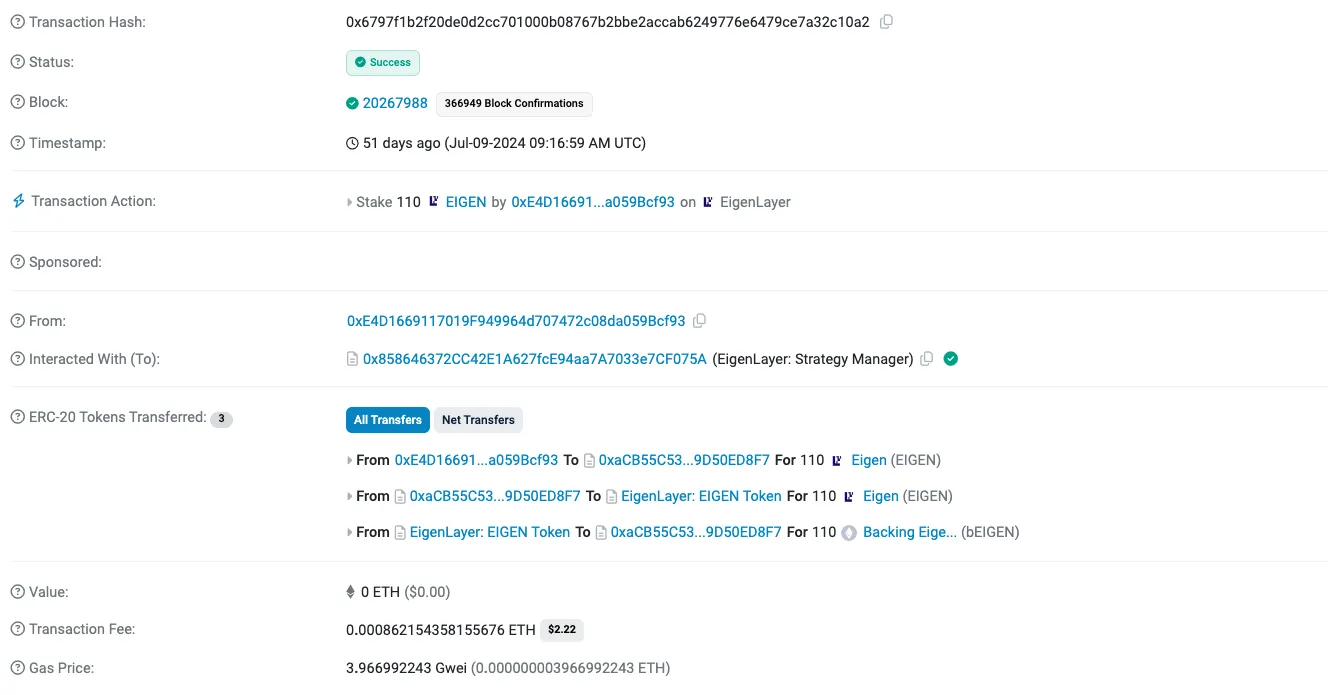

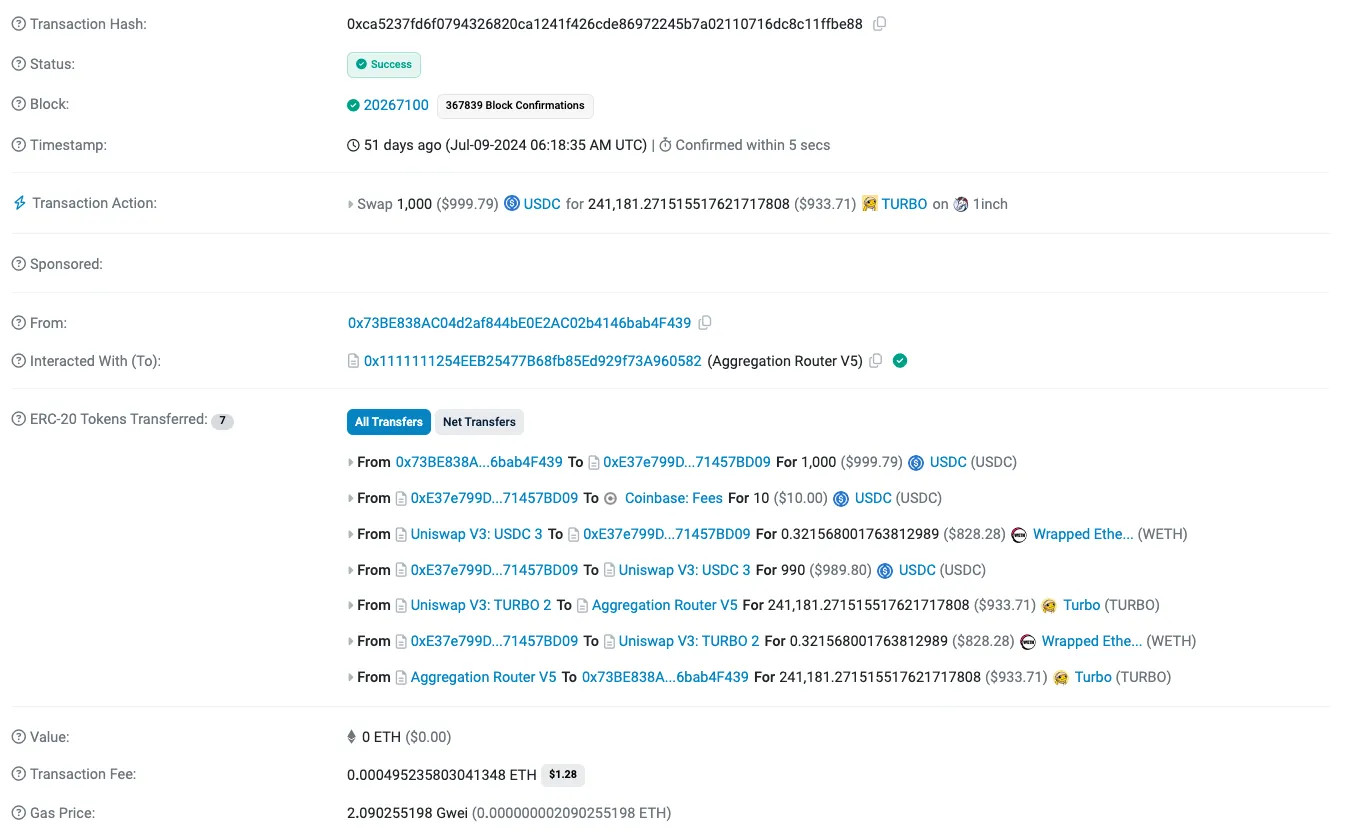

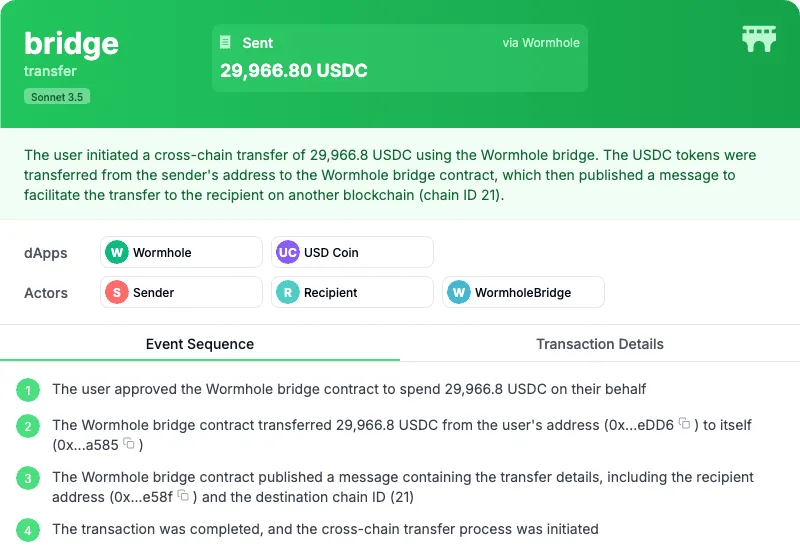

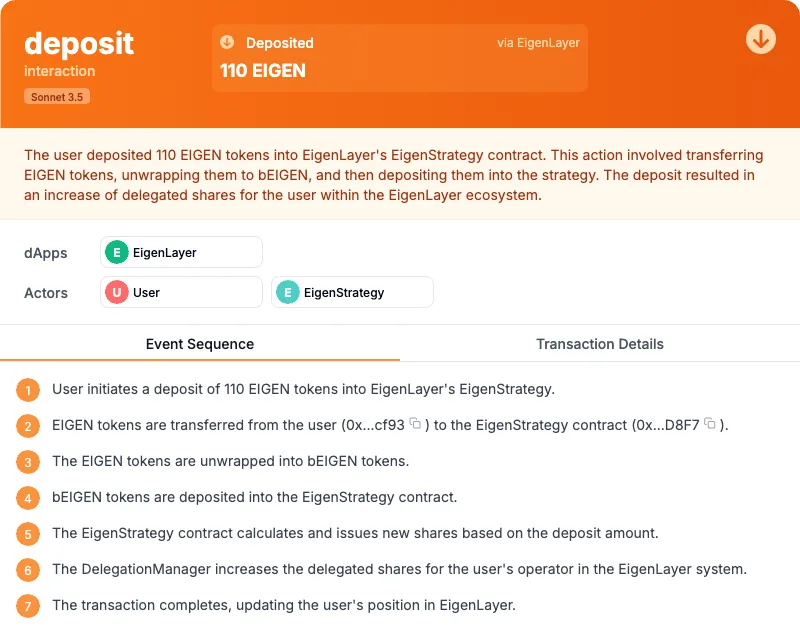

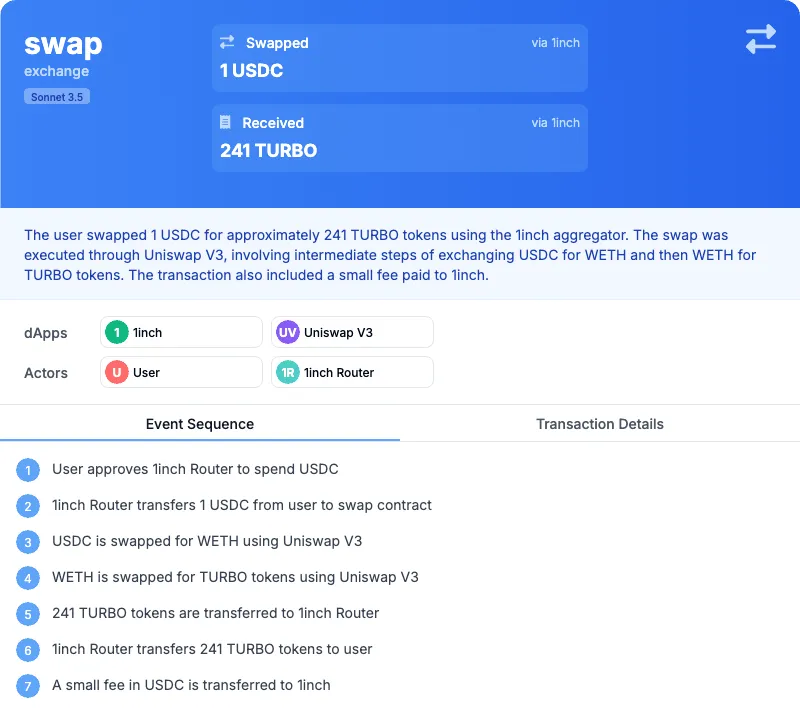

|  | Example 1 | Example 2 | Example 3 |
|---|---|---|---|
| Etherscan |  |  |  |
| Unravel with Anthropic Claude Sonnet 3.5 |  |  |  |
| Comments | Here the LLM, lacking a rich enough off-chain dataset, wasn’t able to infer the meaning of Chain ID 21, which is not available on-chain. | Here Etherscan’s labels did a good job of classifying the transaction, yet the breakdown of the sequence made it clear to the user why the transaction involved so many calls. | This is a false positive: the LLM misunderstood the decimal places of the USDC ERC20 contract. |
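
The decimal mix-up in Example 3 is a reminder of how raw token amounts relate to displayed ones. As a small worked example (our own illustration, not a description of how Unravel handles amounts), the helper below scales a raw integer amount by a token’s `decimals` value; USDC on Ethereum uses 6 decimals, while many ERC-20 tokens use 18. The function name is hypothetical.

```python
from decimal import Context, Decimal

# 80 digits of precision is enough to hold any uint256 value exactly.
_CTX = Context(prec=80)


def to_display_amount(raw_amount: int, decimals: int) -> Decimal:
    """Convert a raw on-chain integer amount into its human-readable value."""
    return Decimal(raw_amount).scaleb(-decimals, context=_CTX)


# The same raw value read with the wrong decimals is off by orders of magnitude.
usdc_raw = 1_500_000
correct = to_display_amount(usdc_raw, 6)   # 1.5 USDC
mistaken = to_display_amount(usdc_raw, 18) # what assuming 18 decimals would give
```

Doing this arithmetic deterministically from the off-chain `decimals` label, rather than leaving it to the model, is one possible way to avoid this class of error.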